Category:Language modeling

statistical model of structure of language

Subcategories

This category has the following 2 subcategories, out of 2 total.

L

T

Media in category "Language modeling"

The following 23 files are in this category, out of 23 total.

-

BERT embeddings 01.png 1,426 × 532; 36 KB

-

BERT input embeddings.png 1,338 × 344; 13 KB

-

BERT masked language modelling task.png 1,426 × 708; 40 KB

-

BERT next sequence prediction task.png 1,426 × 738; 34 KB

-

Decoder self-attention with causal masking, detailed diagram.png 1,426 × 1,000; 40 KB

-

Encoder cross-attention, computing the context vector by a linear sum.png 1,426 × 520; 16 KB

-

Encoder cross-attention, multiheaded version.png 1,426 × 1,150; 64 KB

-

Encoder cross-attention.png 1,426 × 570; 21 KB

-

Encoder self-attention, block diagram.png 1,426 × 520; 19 KB

-

Encoder self-attention, detailed diagram.png 1,426 × 958; 39 KB

-

Latxa logotipoa.jpg 800 × 800; 44 KB

-

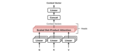

Multiheaded attention, block diagram.png 656 × 600; 32 KB

-

Transformador arquitectura.png 965 × 1,238; 131 KB

-

Transformer decoder, with norm-first and norm-last.png 1,426 × 1,500; 68 KB

-

Transformer encoder, with norm-first and norm-last.png 1,426 × 1,200; 56 KB

-

Transformer, full architecture.png 1,426 × 1,500; 55 KB

-

Transformer, one decoder block.png 1,426 × 796; 24 KB

-

Transformer, one encoder block.png 1,426 × 503; 15 KB

-

Transformer, one encoder-decoder block, detailed view.png 1,426 × 1,040; 43 KB

-

Transformer, one encoder-decoder block.png 1,426 × 791; 33 KB

-

Transformer, stacked layers and sublayers.png 1,426 × 711; 52 KB

-

Transformer, stacked multilayers.png 1,426 × 1,430; 88 KB